Hi, I’m Philip Phuc Nguyen, a software engineer and a proud dad.

I started my 10-year career as a full-stack developer and later had other fancy titles such as senior engineer, tech lead, supreme commander, etc., but I mostly consider myself an explorer of ideas.

My favorite kind of work is when there’re unconventional problems and/or constraints that can’t be solved by established good practices and patterns. I enjoy researching, experimenting and leading a team towards a novel solution for those challenges. Over the past 10 years I’ve designed solutions and architectures that transformed entire systems, improved performance by 10x, and reduced annual infrastructure cost by hundreds of thousands of dollars. Some of these projects are mentioned below.

On the personal side, I often think of myself as a team facilitator. I had experience in almost all typical positions in tech companies (including being the founder of one) and can be a good collaborator, mentor or leader in different circumstances. I value team and engineering culture, and in fact consider it my #1 priority in evaluating a job opportunity.

Outside of tech I enjoy books, movies (from Hayao Miyazaki and Christopher Nolan), badminton and spending time with my family.

Notable Projects

I have a keen interest in the Meteor and Node.js ecosystem, so most of the projects below fall in that category.

Change Streams System

The Change Streams System was introduced in my Meteor Impact 2020 talk.

Challenge

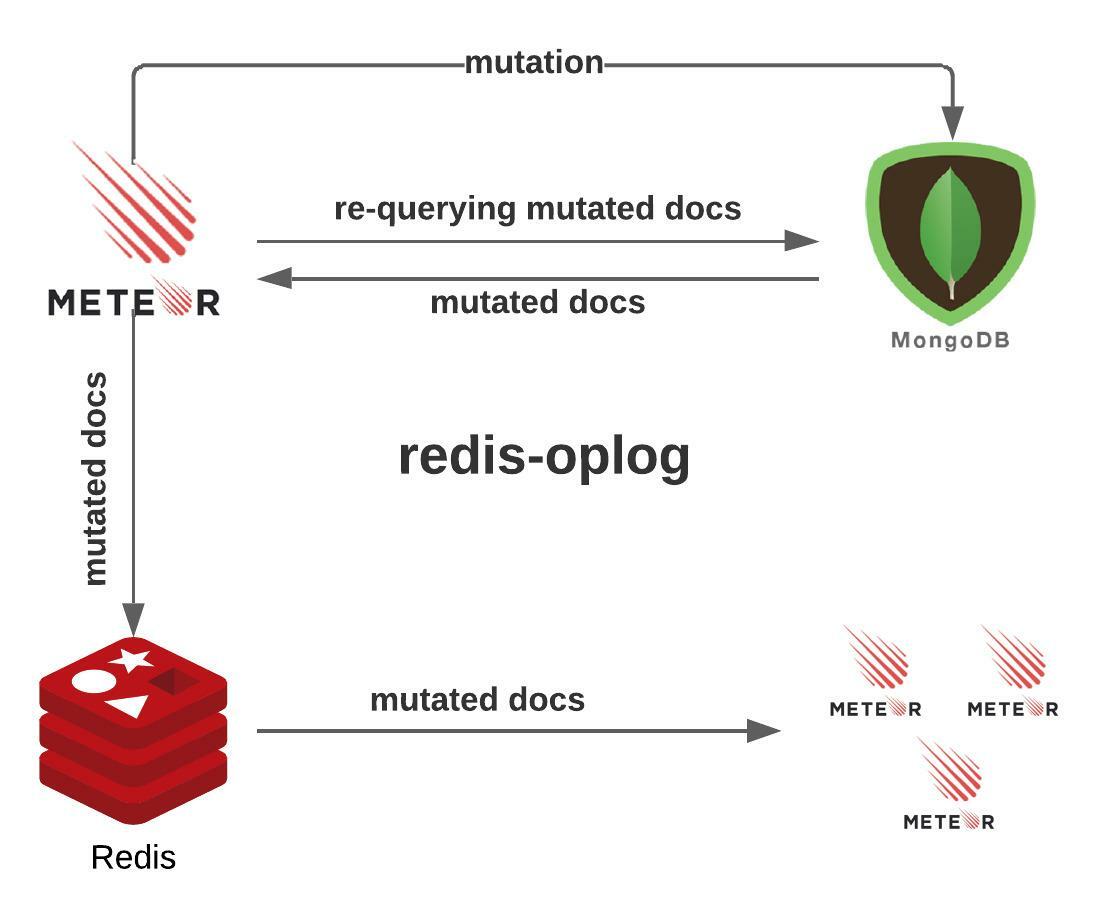

As a platform for organizing large-scale online and hybrid events, Pathable (one

of Meteor Cloud’s biggest customers) faced severe performance issues originating

from the way Meteor interacted with the MongoDB oplog. We switched to a

well-known alternative - redis-oplog, but soon realized a major drawback of

this library: Because redis-oplog can’t reliably determine the outcome of a

mutation, it has to perform an extra database fetch after each mutation before

dispatching that change to Redis:

This mechanism consumes database resources unnecessarily and also potentially introduces race condition.

Solution

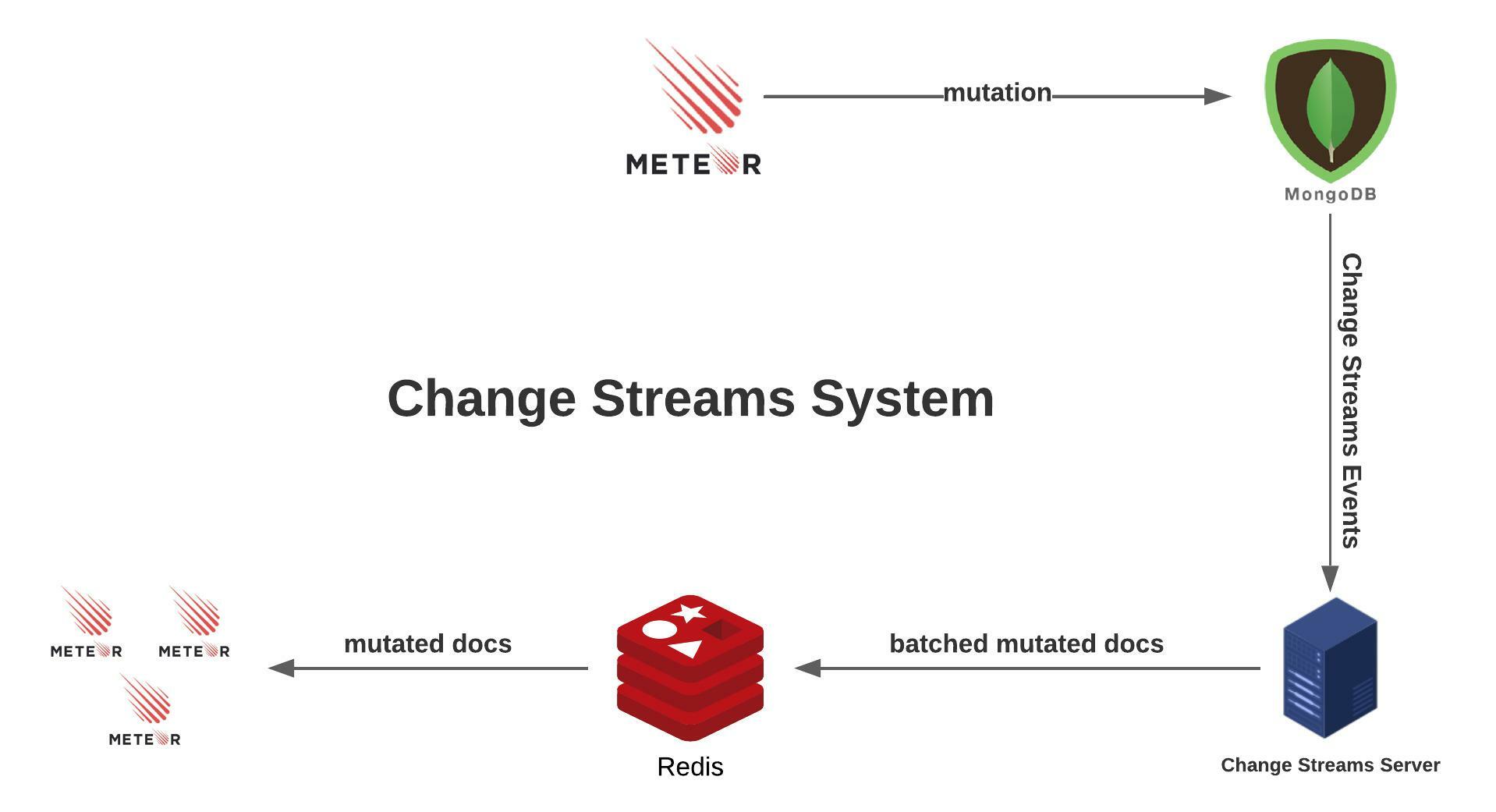

I designed an alternative architecture called Change Streams System (CSS) to

replace redis-oplog. In CSS, changes made to the database are captured by a

Node.js server by listening to MongoDB Change Streams which doesn’t introduce

any significant database overhead:

Moreover, before pushing the changes to Redis, the server also processes and merges similar mutations that occur in a short period of time (a common mutation pattern in Pathable), which reduces Redis load significantly.

Outcome

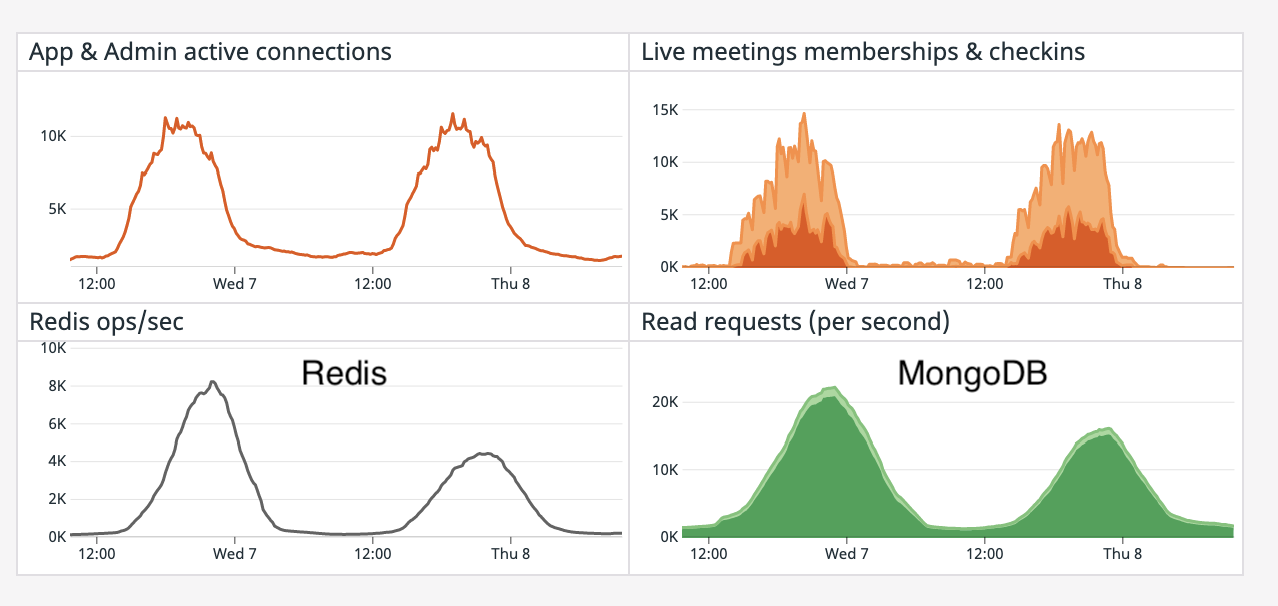

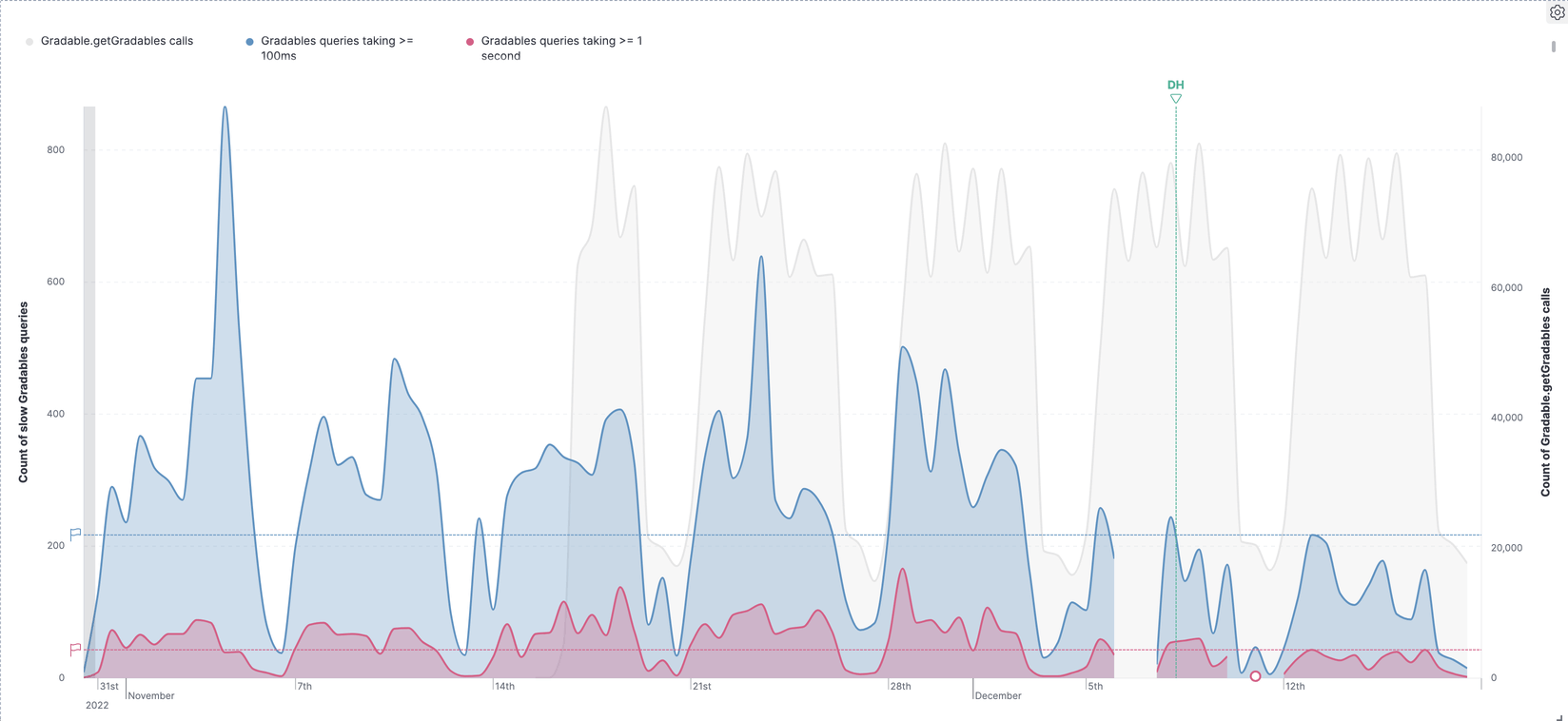

The charts above show metrics of the same Pathable event on two consecutive days with similar level of online user activity, the first day without CSS and the second day with CSS enabled.

On average CSS resulted in a 50% reduction in Redis usage, a 30% reduction in MongoDB usage and helped save hundreds of thousands of dollars in annual infrastructure cost.

Outsmarting The MongoDB Query Planner

Challenge

MaestroQA was struggling with a database query performance issue where queries on a highly active collection took a long time, from seconds to minutes to complete. The collection had close to 100 million documents and more than 20 indexes.

Established practices in MongoDB queries and indexes optimization didn’t help. Caching was not applicable to the use case either.

By performing extensive benchmarking, I realized that during the execution of long-running queries the part taking most of the time is index selection. Apparently the MongoDB Query Planner couldn’t efficiently handle such a complex indexes structure. That said we couldn’t reduce the number of indexes because the range of query patterns on the collection was so diverse that removing any existing index would cause a large number of COLLSCANs.

Solution

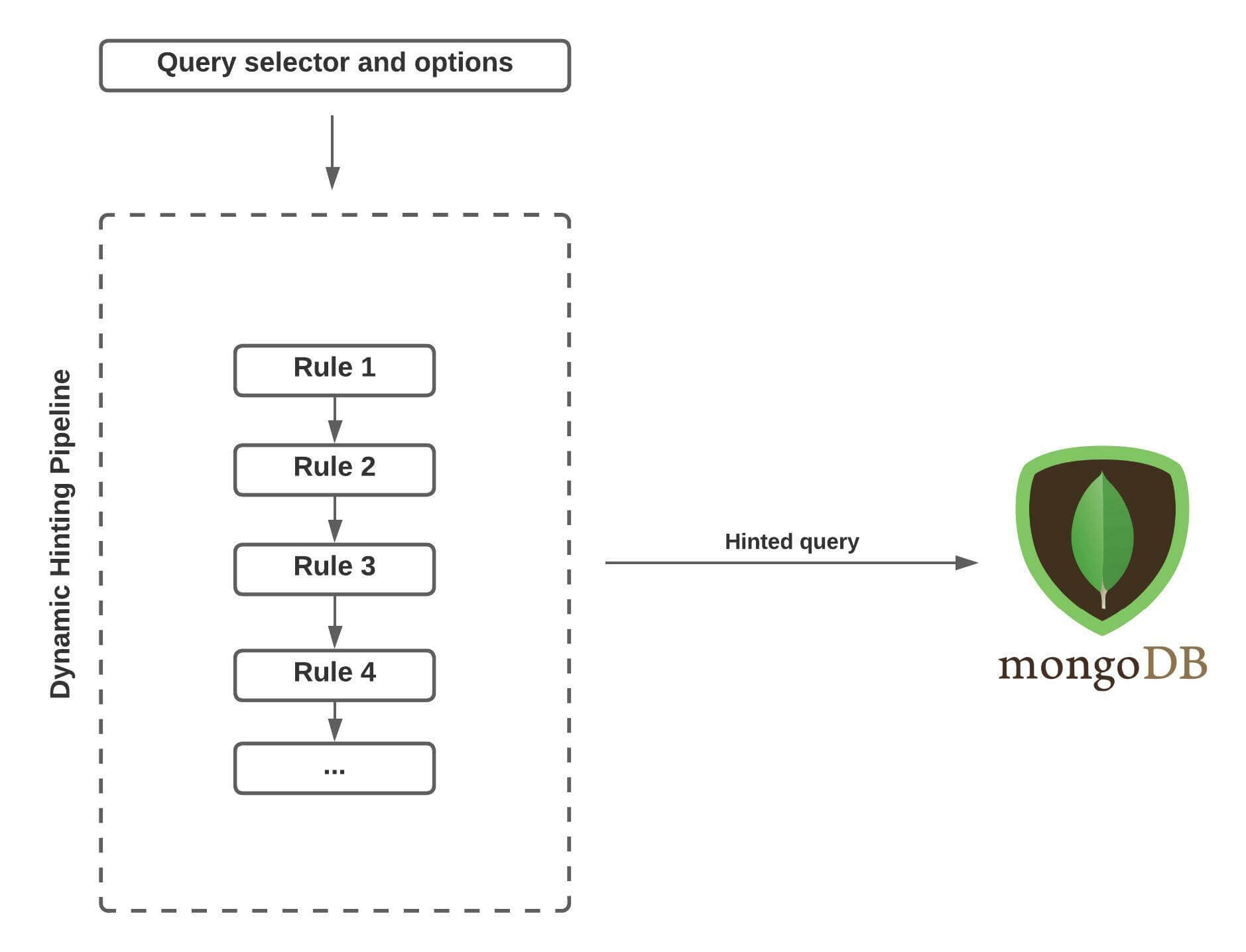

I experimented with an idea called Dynamic Hinting where we attempted to do the

job of the MongoDB Query Planner: Selecting an appropriate index at the

application level and adding a hint to the query before sending it to MongoDB,

effectively bypassing the Query Planner.

In order to pick indexes for our sophisticated queries, we implement a Lambda that periodically pulls slow query logs from Atlas and pushes them to Elastic for analysis purposes.

By thoroughly analyzing the patterns of slow queries, their selectors, options, and the duration they took, we were able to come up with a set of “dynamic hinting rules” and use them to build a pipeline that can confidently suggest an ideal index for various query patterns (or just fall back to the Query Planner when there aren’t enough data and confidence to make an index decision).

Outcome

The experiment resulted in encouraging performance gain, where the number of

queries taking more than 100ms was reduced by 58%

and the number of queries taking more than 1 second was reduced by 61%.

(The vertical green "DH" line indicates the time Dynamic Hinting was released)

Dynamic Hinting still has a lot of potentials, and we expect to increase both the coverage and the aggressiveness of hinting rules as we get more data and insights after the initial release.

Serverless Pub/Sub for Meteor

Challenge

Pub/Sub has always been both the strength and weakness of Meteor. While the feature allows developers to build reactive apps effortlessly, its performance suffers when implemented at scale.

Meteor Pub/Sub doesn’t scale well because there’s too much overhead to maintain a publication observer and that overhead increases exponentially with the number of clients, especially when subscribe patterns are diverse and observers can’t be reused.

Solution

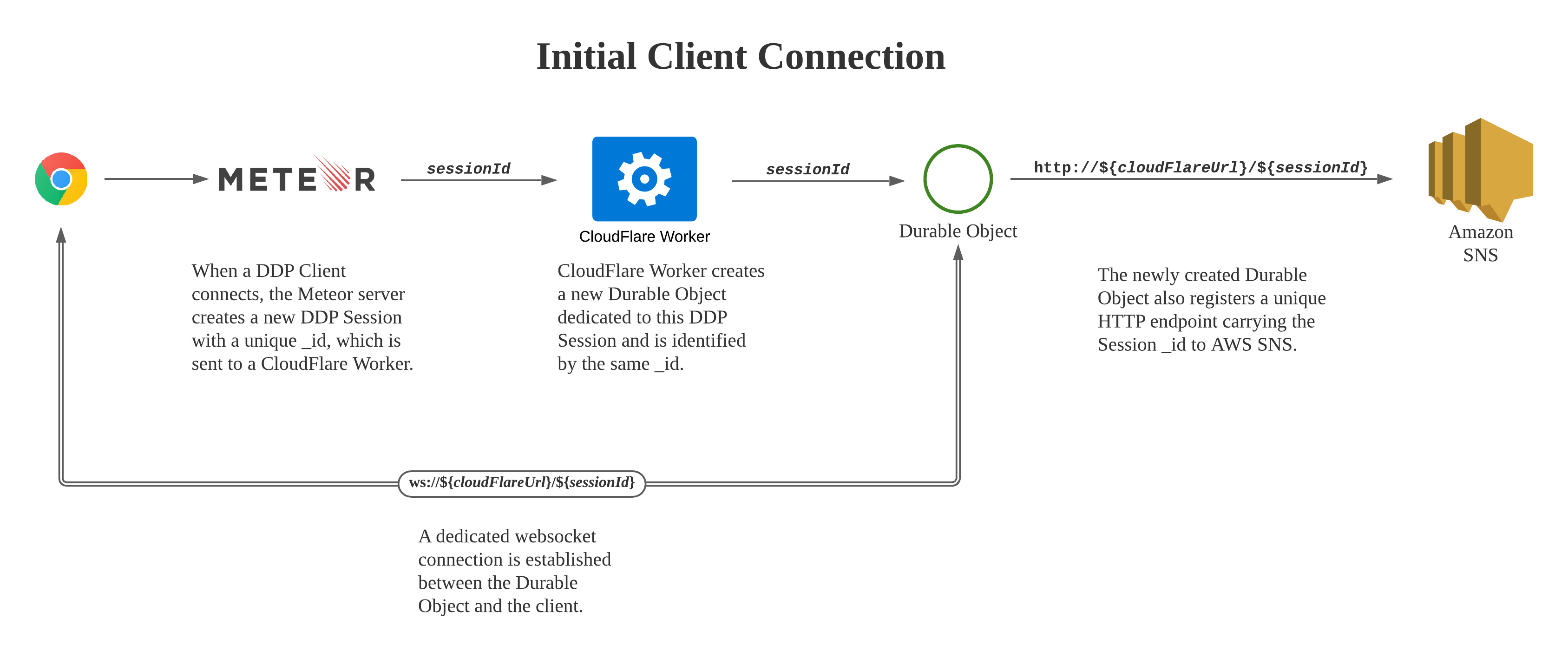

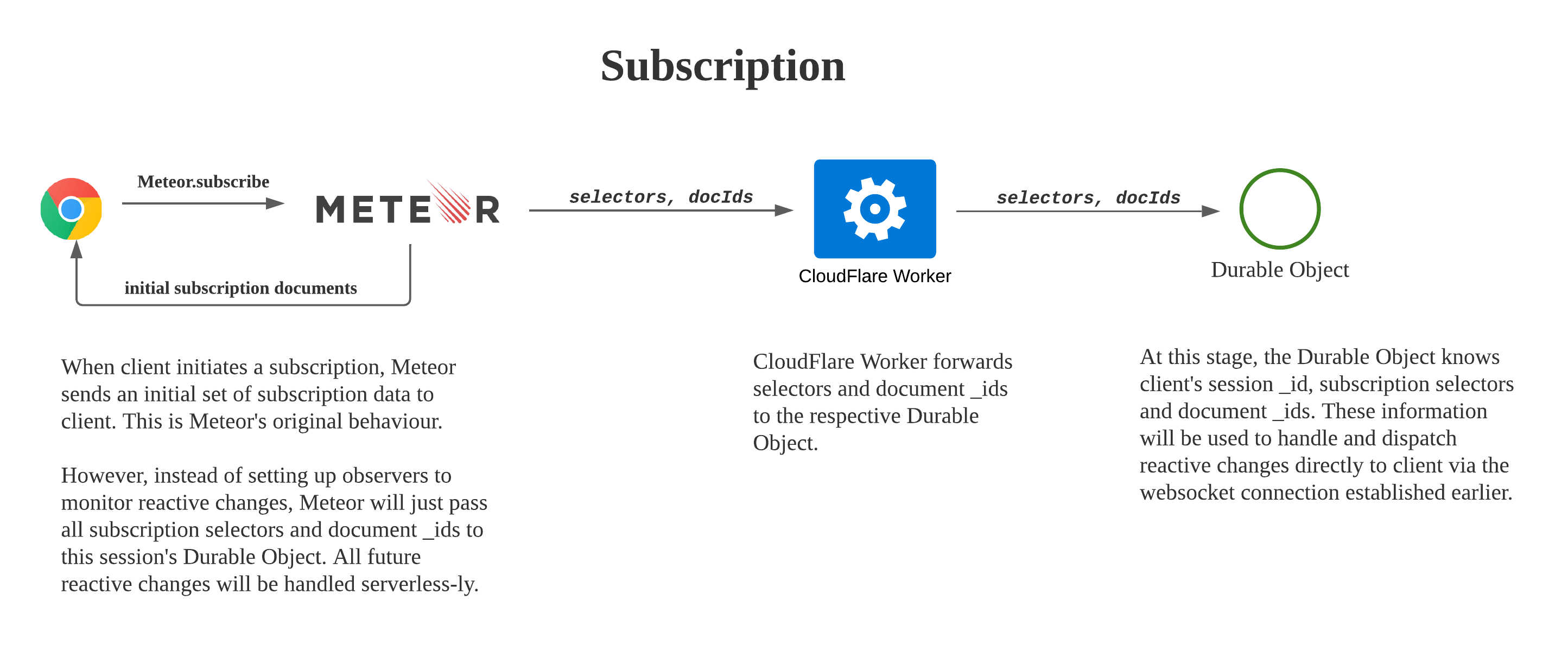

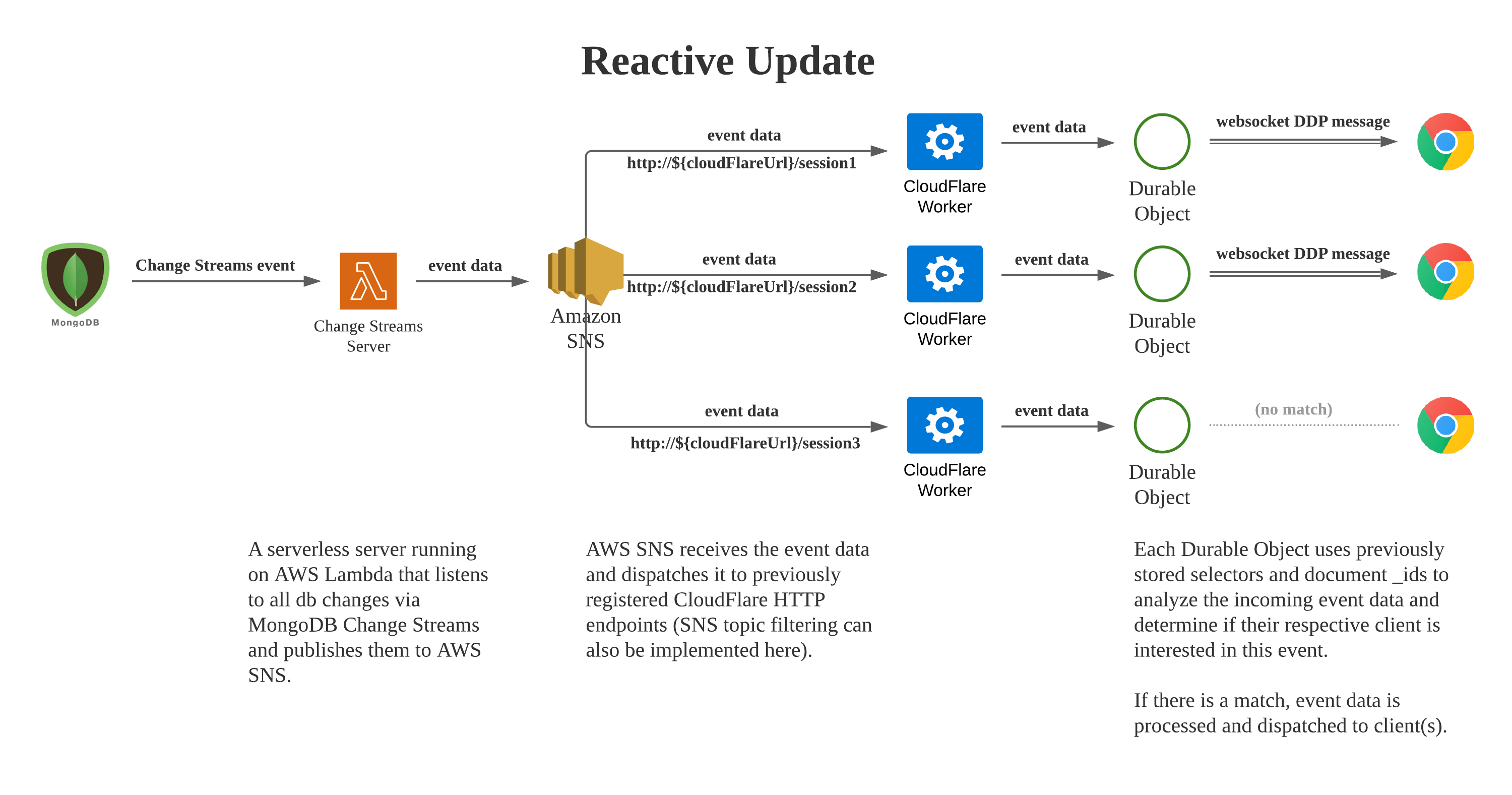

To address that scaling weakness of Meteor, I’ve been working on an experimental architecture called serverless-oplog, built on top of technologies such as Cloudflare Workers, Cloudflare Durable Objects and MongoDB Change Streams.

serverless-oplog handles Meteor Pub/Sub workload serverless-ly

by assigning a dedicated Durable Object to each DDP client and having all of the client’s

Pub/Sub activities processed by the Durable Object. As new clients connect, new Durable Objects

will be spun up, giving us infinite scalability.

On the Meteor side, the server just needs to serve the initial data fetched from publish cursors (which will also be offloaded to Cloudflare in a future version of serverless-oplog). There is no observer created at all, which means we have basically migrated the entire Pub/Sub overhead away from the Meteor server!

The architecture is illustrated in the following diagrams:

Outcome

A working prototype is already available!

serverless-oplog-demo.meteorapp.com

I’ll continue working on the project to make it ready for production and prepare for a public release soon.

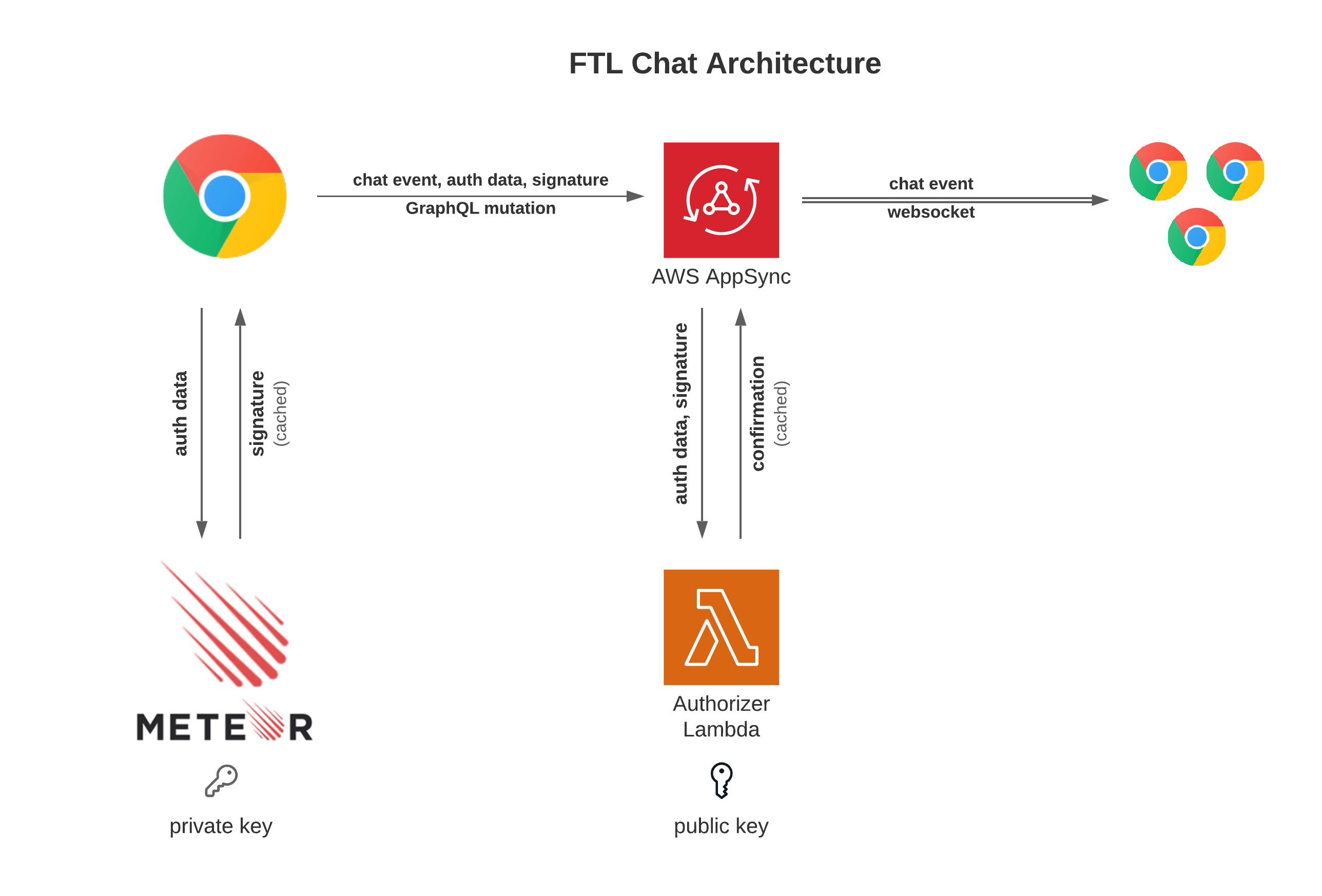

Faster Than Light Chat System (FTL Chat)

Challenge

Despite having an indeed funny name, FTL Chat was an interesting project where we were facing a time-constrained challenge: A very large-scale event (up to 60k participants) was contracted and one of their main priorities is online chat. Our chat system at the time was built on top of Meteor Pub/Sub, and by benchmarking we realized that the system wouldn’t be able to handle more than 1k users chatting at the same time across different chat rooms.

Solution

Since the event contract came at a short notice, we had to think of solutions to offload chat overhead away from our Meteor servers entirely, since it would take a long time to optimize Meteor for this kind of load.

I proposed an idea about using AWS AppSync as the new backend for chat, which eventually became the architecture for the FTL Chat project:

A novel feature of FTL Chat is its ability to integrate seamlessly with the Meteor accounts system by using public-key cryptography and AWS Lambda, with an authentication caching strategy that introduces minimal performance impact to the Meteor servers.

Outcome

FTL Chat effortlessly served 10k simultaneous chatters in the event and eventually replaced the old chat system in production.

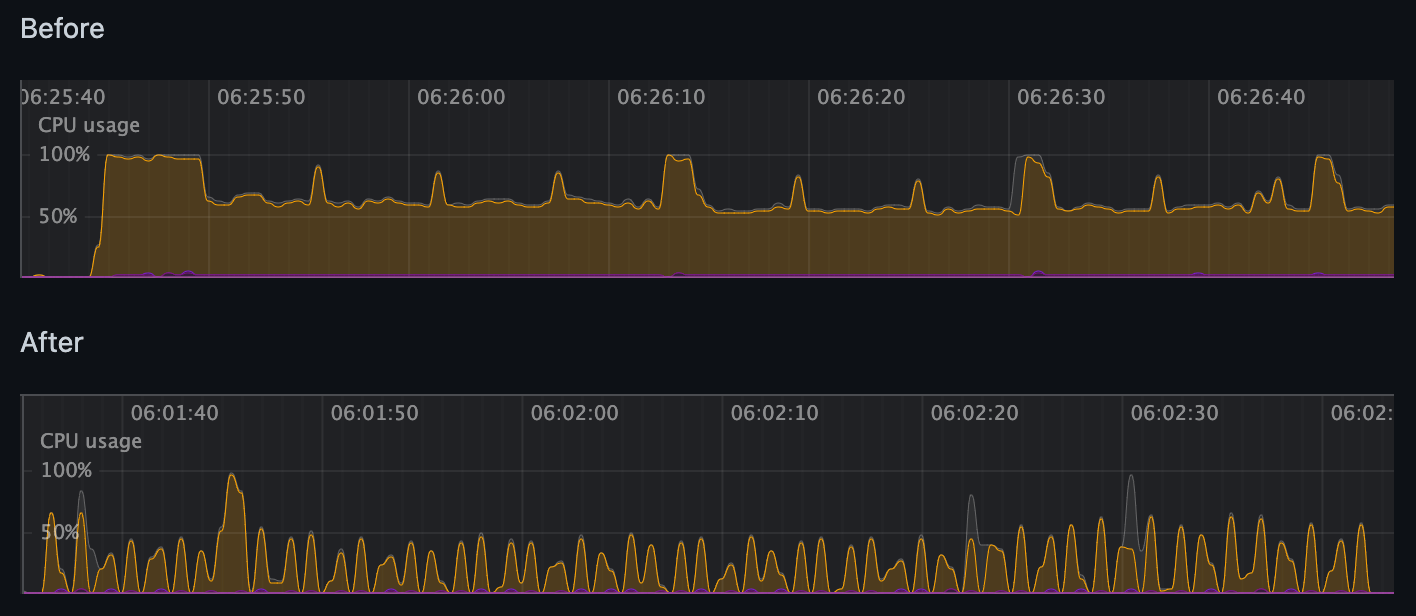

Throttled Meteor Autorun

Challenge

During a large online event we encountered a client-side performance problem where browser CPU was constantly pegged, severely affecting UX. It turned out the reactive computations underlying our UI were being rerun too fast because of very high volume of incoming DDP messages.

Solution

Instead of limiting or even turning off reactivity (which was undesirable for the event),

I experimented with the idea of throttling Meteor’s autorun computations. Interestingly,

simply passing a throttled function to Tracker.autorun didn’t work. I eventually

found a way by diving into the tracker package and modifying how subsequent

recomputations were called, effectively resulting in a throttled autorun implementation.

Outcome

Client-side CPU usage improved significantly:

Other notable open-sourced projects

-

pub-sub-lite: Simulating Pub/Sub with Meteor methods.

-

ddp-health-check: DDP performance monitoring for Meteor.

-

meteor-typescript-import-transform: Fixing deprecated legacy TypeScript patterns that no longer work with the official Meteor

typescriptpackage.